Introduction to EMC

A passenger jet explodes in mid-air, killing all 230 people on board. A hospital syringe pump spontaneously ceases its delivery of life-preserving medication without triggering any alarms. A nuclear power plant goes on alert status when turbine control valves spontaneously close. Each of these actual events was a symptom of an electromagnetic compatibility problem.

Electromagnetic compatibility (EMC) is broadly defined as a state that exists when all devices in a system are able to function without error in their intended electromagnetic environment.

In 1996, TWA Flight 800 bound from New York to Paris exploded over the ocean shortly after take-off. After a lengthy investigation that involved salvaging and reconstructing major portions of the aircraft, it was concluded that the most probable cause of the explosion was a spark in the center wing fuel tank that ignited the air/fuel mixture. This spark was likely the direct result of a large voltage transient, possibly a power line transient or electrostatic discharge.

In 2007, a study conducted by researchers at the University of Amsterdam documented nearly 50 incidents of electromagnetic interference from cell phone use in hospitals, and classified 75 percent of them as significant or hazardous. Another study, published in 2008 by researchers from Amsterdam, showed that electromagnetic interference from RFID devices also had the potential to cause critical care medical equipment to malfunction.

Spontaneous valve closures at the Niagara Mohawk Nine Mile Point #2 nuclear power plant were due to interference generated by workers' wireless handsets. Despite the tremendous emphasis on safety and security that is placed on the design and construction of all nuclear power plants, the relatively weak emissions from common wireless handsets resulted in a major malfunction.

Unfortunately, these are not rare, isolated occurrences. Electromagnetic compatibility problems result in many deaths and billions of dollars in lost revenue every year. The past decade has seen an explosive increase in the number and severity of EMC problems, primarily due to the proliferation of microprocessor-controlled devices, high-frequency circuits and low-power transmitters.

Elements of an EMC Problem

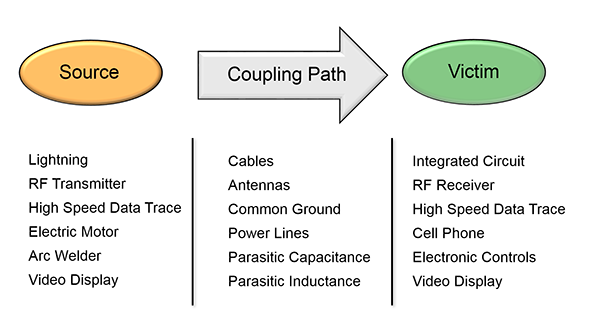

There are three essential elements to an EMC problem as illustrated in Figure 1. There must be a source of electromagnetic energy, a receptor (or victim) that cannot function properly due to the electromagnetic energy, and a path between them that couples the energy from the source to the receptor. Each of these three elements must be present, although they may not be readily identified in every situation. Electromagnetic compatibility problems are generally solved by identifying at least two of these elements and eliminating (or attenuating) one of them.

Figure 1. The three essential elements of an EMC problem.

For example, in the case of the nuclear power plant, the receptor was readily identified: the turbine control valves were malfunctioning. The source and the coupling path were originally unknown; however, an investigation revealed that wireless handsets used by the plant employees were the source. Although the coupling path was still not known at this point, the problem was solved by eliminating the source (e.g., restricting the use of low-power radio transmitters in certain areas). A more thorough and perhaps more secure approach would be to identify the coupling path and take steps to eliminate it. For example, suppose it was determined that radiated emissions from a wireless handset were inducing currents on a cable connected to a printed circuit card containing the circuit that controlled the turbine valves. If the operation of the circuit was found to be adversely affected by these induced currents, a possible coupling path would be identified. Shielding, filtering, or rerouting the cable, and filtering or redesigning the circuit, would then be possible methods of attenuating the coupling path to the point where the problem is non-existent.

When a Roosevelt Island Tramway car suddenly sped up at the end of the line and crashed into a concrete barrier, the problem was thought to be transients on the tramway's power. The coupling path was presumably through the power supply to the speed control circuit, although investigators were unable to reproduce the failure so the source and coupling path were never identified conclusively. The receptor, on the other hand, was clearly shown to be the speed control circuit and this circuit was modified to keep it from becoming confused by unintentional random inputs. In other words, the solution was to eliminate the receptor by making the speed control circuit immune to the electromagnetic phenomenon produced by the source.

Potential sources of electromagnetic compatibility problems include radio transmitters, power lines, electronic circuits, lightning, lamp dimmers, electric motors, arc welders, solar flares and just about anything that utilizes or creates electromagnetic energy. Potential receptors include radio receivers, electronic circuits, appliances, people, and just about anything that utilizes or can detect electromagnetic energy.

Methods of coupling electromagnetic energy from a source to a receptor fall into one of four categories:

- Conducted (electric current)

- Inductively coupled (magnetic field)

- Capacitively coupled (electric field)

- Radiated (electromagnetic field)

Coupling paths often utilize a complex combination of these methods, making the path difficult to identify even when the source and receptor are known. There may be multiple coupling paths, and steps taken to attenuate one path may enhance another.
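The source, path, and receptor model can be illustrated with a rough numerical sketch. The example below assumes a deliberately simplified picture that is not taken from any of the incidents above: the source is described by a single power level, each coupling path by a fixed attenuation in dB, and the receptor by a single susceptibility threshold. Contributions from the paths are added as powers, which ignores phase and frequency dependence. All names and numbers are hypothetical and chosen only for illustration.

```python
import math


def dbm_to_mw(level_dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10.0 ** (level_dbm / 10.0)


def mw_to_dbm(power_mw: float) -> float:
    """Convert a power in milliwatts to dBm."""
    return 10.0 * math.log10(power_mw)


def received_level_dbm(source_dbm: float, path_losses_db: dict) -> float:
    """Level at the receptor, summing the power coupled over every path.

    Assumption: the paths add incoherently (power sum), a simplification.
    """
    total_mw = sum(dbm_to_mw(source_dbm - loss) for loss in path_losses_db.values())
    return mw_to_dbm(total_mw)


def is_compatible(source_dbm: float, path_losses_db: dict, threshold_dbm: float) -> bool:
    """True when the coupled level stays below the receptor's threshold."""
    return received_level_dbm(source_dbm, path_losses_db) < threshold_dbm


# Hypothetical values: a 30 dBm transmitter, a receptor upset at -20 dBm,
# and two coupling paths with different attenuations.
source_dbm = 30.0
threshold_dbm = -20.0
paths = {"radiated_to_cable": 40.0, "conducted_via_power": 70.0}

before = is_compatible(source_dbm, paths, threshold_dbm)

# Attenuate the dominant path (e.g., by shielding the cable) by a further 40 dB.
shielded_paths = dict(paths, radiated_to_cable=80.0)
after = is_compatible(source_dbm, shielded_paths, threshold_dbm)

print("compatible before shielding:", before)
print("compatible after shielding:", after)
```

In this sketch the same result could be reached by lowering `source_dbm` (eliminating the source) or raising `threshold_dbm` (hardening the receptor), which mirrors the three options described above. Note that once the dominant path is attenuated, the remaining path sets the received level, so further shielding of the cable alone would bring little additional benefit.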

A Brief History of EMC

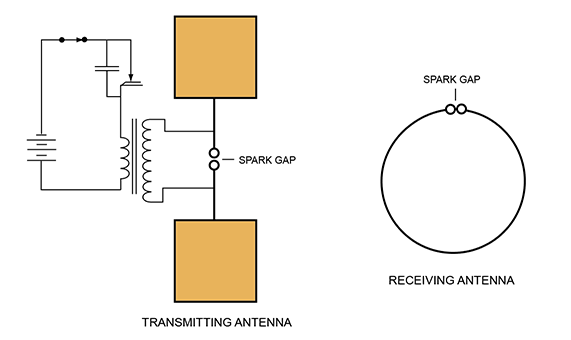

In the late 1880's, the German physicist Heinrich Hertz performed experiments that demonstrated the phenomenon of radio wave propagation, thus confirming the theory published by James Clerk Maxwell two decades earlier. Hertz generated a spark in a small gap between two metal rods that were connected at the other end to metal plates, as shown in Figure 2. The spark excitation created an oscillating current on the rods, resulting in electromagnetic radiation near the resonant frequency of the antenna. The receiving antenna was a loop of wire with a very thin gap. A spark in the gap indicated the presence of a time-varying field, and the maximum spark gap length provided a measurement of the received field's strength.

Figure 2. Early antennas constructed by Heinrich Hertz.

Guglielmo Marconi learned of Hertz's experiments and improved upon them. In 1895, he developed the wireless telegraph, the first communications device to convey information using radio waves. Although the significance of his invention was not initially appreciated, the U.S. Navy took an interest due to the potential of this device to enhance communication with ships at sea.

In 1899, the Navy initiated the first shipboard tests of the wireless telegraph. While the tests were successful in many ways, the Navy was unable to operate two transmitters simultaneously. The reason for this problem was that the operating frequency and bandwidth of the early wireless telegraph were primarily determined by the size, shape and construction of the antenna. Receiving antennas were always "tuned" (experimentally) to the same operating frequency as the transmitting antenna; however, the bandwidth was difficult to control. Therefore, when two transmitters were operating simultaneously, receivers detected the fields from both of them to some extent and the received signal was generally unintelligible. This early electromagnetic compatibility problem came to be referred to as Radio Frequency Interference (RFI). As the popularity of the wireless telegraph grew, so did the concern about RFI.

In 1904, Theodore Roosevelt signed an executive order empowering the Department of Commerce to regulate all private radio stations and the Navy to regulate all government stations (and all radio stations in times of war). Different types of radio transmitters were assigned different frequency allocations and often were only allowed to operate at certain times in order to reduce the potential for RFI.

By 1906, various spark-quenching schemes and tuning circuits were being employed to reduce the bandwidth of wireless transmitters and receivers significantly. However, it was the invention of the vacuum tube oscillator in 1912 and the superheterodyne receiver in 1918 that made truly narrowband transmission and reception possible. These developments also made it possible to transmit reasonably clear human speech, which paved the way for commercial radio broadcasts.

The period from about 1925 to 1950 is known as the golden age of broadcasting. During this period the popularity of radio soared. As the number of radios proliferated, so did the electromagnetic compatibility problems. RFI was a common problem because the regulations governing intentional or unintentional interference with a commercial radio broadcast were lax and more people had access to radio equipment. In order to alleviate this problem, the Federal Communications Commission (FCC) was established in 1934 as an independent agency of the U.S. Government. It was empowered to regulate U.S. interstate and foreign communication by radio, wire, and cable. FCC regulations and licensing requirements significantly reduced the number of radio frequency interference problems.

However, due to the increasing number of radio receivers being located in homes, the general public was introduced to a variety of new EMC problems. Unintentional electromagnetic radiation sources such as thunderstorms, gasoline engines, and electric appliances often created bigger interference problems than intentional radio transmitters.

Intrasystem interference was also a growing concern. Superheterodyne receivers contained their own local oscillator, which had to be isolated from other parts of the radio's own circuit. Radios and phonographs were lumped together in home entertainment systems. Radios were installed in automobiles, elevators, tractors, and airplanes. The developers and manufacturers of these systems found it necessary to develop better grounding, shielding, and filtering techniques in order to make their products function.

In the 1940's many new types of radio transmitters and receivers were developed for use during World War II. Radio signals were not only used for communication, but also to locate ships and planes (RADAR) and to jam enemy radio communications. Because of the immediate need, this equipment was hurriedly installed on ships and planes resulting in severe EMC problems.

Experiences with electromagnetic compatibility problems during the war prompted the development of the first joint Army‑Navy RFI standard, JAN‑I‑225, "Radio Interference Measurement," published in 1945. Much more attention was devoted to RFI problems in general, and techniques for grounding, shielding and filtering in particular. Electromagnetic compatibility became an engineering specialization in a manner similar to antenna design or communications theory.

In 1954, the first Armour Research Foundation Conference on Radio Frequency Interference was held. This annual conference was sponsored by both government and industry. Three years later, the Professional Group on Radio Frequency Interference was established as the newest of several professional groups of the Institute of Radio Engineers. Today, this group is known as the Electromagnetic Compatibility Society of the Institute of Electrical and Electronics Engineers (IEEE).

During the 1960's, electronic devices and systems became an increasingly important part of our society and were crucial to our national defense. A typical aircraft carrier, for example, employed 35 radio transmitters, 56 radio receivers, 5 radars, 7 navigational aid systems, and well over 100 antennas [1]. During the Vietnam War, Navy ships were often forced to shut down critical systems in order to allow other systems to function. This alarming situation focused even more attention on the issue of electromagnetic compatibility. Outside the military, an increasing dependence on computers, satellites, telephones, radio and television made potential susceptibility to electromagnetic phenomena a very serious concern.

The 1970's witnessed the development of the microprocessor and the proliferation of small, low‑cost, low‑power semiconductor devices. Circuits utilizing these devices were much more sensitive to weak electromagnetic fields than the older vacuum tube circuits. As a result, more attention was directed toward solving an increasing number of electromagnetic susceptibility problems that occurred with these circuits.

In addition to traditional radiated electromagnetic susceptibility (RES) problems due to intentional and unintentional radio frequency transmitters, three classes of electromagnetic susceptibility problems gained prominence in the '70s. Perhaps the most familiar of these is electrostatic discharge (ESD). An electrostatic discharge occurs whenever two objects with significantly different electric potentials come together. The "shock" that is felt when a person reaches for a door knob after walking across a carpet on a dry day is a common example. However, even discharges too weak to be felt are capable of destroying semiconductor devices.

Another electromagnetic susceptibility problem that gained notoriety during the '70s was the electromagnetic pulse (EMP). The military realized that a high-altitude detonation of a nuclear warhead would generate an extremely intense pulse of electromagnetic energy over a very wide area. This pulse could easily damage or disable critical electronic systems. To address this concern, a significant effort was initiated to develop shielding and surge protection techniques that would protect critical systems in this very severe environment.

The emergence of a third electromagnetic susceptibility problem, power line transient susceptibility (PLT), was also a direct consequence of the increased use of semiconductor devices. Vacuum tube circuits generally required huge power supplies that tended to isolate the electronics from noise on the power line. High-speed, low-power semiconductor devices, on the other hand, were much more sensitive to transients, and their modest power requirements often resulted in the use of relatively small, low-cost supplies that did not provide much isolation from the power line. In addition, the low cost of these devices meant that more of them were being located in homes and offices, where the power distribution is generally not well regulated and is relatively noisy.

The emphasis on electromagnetic susceptibility during the 1970's is exemplified by the number of task groups, test procedures, and product standards dealing with susceptibility that emerged during this decade. One organization established in the late 70's, known as the EOS/ESD Association (EOS stands for electrical overstress), deals exclusively with the susceptibility problems mentioned above.

Another change that occurred during the 60's and 70's was the gradual displacement of the term RFI by the more general term electromagnetic interference (EMI). Since not all interference problems occurred at radio frequencies, this was considered to be a more descriptive nomenclature. EMI is often categorized as radiated EMI or conducted EMI depending on the coupling path.

Two events in the 1980's had significant, wide-ranging effects on the field of electromagnetic compatibility:

- The introduction and proliferation of low-priced personal computers and workstations.

- Revisions to Part 15 of the FCC Rules and Regulations that placed limits on the electromagnetic emissions from computing devices.

The proliferation of low-priced computers was important for two reasons. First, a large number of consumers and manufacturers were introduced to a product that was both a significant source and a significant receptor in electromagnetic compatibility problems. Second, the availability of low-cost, high-speed computation spurred the development of a variety of numerical analysis techniques that have had an overwhelming influence on the ability of engineers to analyze and solve EMC problems.

The FCC regulations governing EMI from computing devices were phased in between 1980 and 1982. They required all electronic devices operating at frequencies of 9 kHz or greater and employing "digital techniques" to meet stringent limits regulating the electromagnetic emissions radiated by the device or coupled to the power lines. Virtually all computers and computer peripherals sold or advertised for sale in the U.S. must meet these requirements. Many other countries established similar requirements.

In the 1990's, the European Union adopted EMC regulations that went well beyond the FCC requirements. The European regulations limited unintentional emissions from appliances, medical equipment and a wide variety of electronic devices that were exempt from the FCC requirements. In addition, the European Union established requirements for the electromagnetic immunity of these devices and defined procedures for testing the susceptibility of electronic systems to radiated electromagnetic fields, conducted power and signal line noise, and electrostatic discharge.

The impact of these regulations was overwhelming. At a time when the market for computers was growing exponentially, many of the latest, most advanced designs were being held back because they were unable to meet government EMC requirements. Companies formed EMC departments and advertised for EMC engineers. An entire industry emerged to supply shielding materials, ferrites, and filters to computer companies. EMC short courses, test labs, magazines, and consultants began appearing throughout the world. The international attention focused on EMC encouraged additional research, and significant progress was made toward the development of more comprehensive test procedures and meaningful standards.

In the past 20 years, several technology trends have had a profound impact on the relevance of EMC and the tools available to ensure it. The emergence of the Internet of Things has resulted in exponential growth in the number of electronic systems that need to function reliably in increasingly complex electromagnetic environments. The introduction of autonomous vehicles and society’s greater dependence on computers to ensure public safety have resulted in a greater emphasis on the reliability of electronic systems. There is less room for error when it comes to specifying meaningful EMC requirements and designing products that are guaranteed to meet those requirements.

Fortunately, the past 20 years have also produced significant breakthroughs that aid engineers in their efforts to anticipate and correct potential EMC problems. Aided by increasingly sophisticated electromagnetic modeling tools, researchers have developed a much greater understanding of the coupling mechanisms responsible for EMC issues. Models have been developed that can anticipate worst-case scenarios and assist with the development of products that are guaranteed to meet their EMC requirements. There have also been significant technology advancements related to the components and materials available to reduce or eliminate unwanted electromagnetic coupling. Examples include new lightweight and low-cost shielding materials employing nanostructures, thinner and more effective absorbing materials, smaller passive filter components, more effective transient suppression components, and more sophisticated digital devices capable of reduced emissions and greater electromagnetic immunity.

The Future of Electromagnetic Compatibility

Today, the trends of the past 20 years are continuing. Computing devices are getting denser, faster, more complex and more pervasive, creating new challenges for the EMC engineer. At the same time, advances in electromagnetic analysis and available design options are revolutionizing the methods used to ensure compliance with EMC requirements.

Government and industry regulations and test procedures related to electromagnetic compatibility continue to be introduced and updated on a regular basis. Nevertheless, the rapid pace of technical innovation virtually ensures that regulations alone will never be sufficient to guarantee the safety and compatibility of electronic systems. This makes it more important than ever to address electromagnetic compatibility issues early in the design, rather than “fixing” a product after it fails to meet a given requirement.